Using pyobis to query for known datasets#

Created: 2022-11-23

Updated: 2023-03-24

Author: Mathew Biddle

This notebook uses the pyobis Python package to query the OBIS API for datasets associated with projects funded under the United States Marine Biodiversity Observation Network. The notebook walks through the process for querying the OBIS api for a specific institution, then using the resultant datasets to gather the locations of all the occurrences using the pyobis package.

The US Marine Biodiversity Observation Network (US MBON) is an interagency initiative that seeks to coordinate across sectors and government to characterize biodiversity and understand drivers of change. US MBON represents a broad, collaborative effort to address the need for systematic collection and sharing of marine life information, ensure that information is available for decision-making and management from local to national levels, and document marine biodiversity status and trends in the face of human- and climate-induced change using a range of technologies and approaches. Through the National Oceanographic Partnership Program, NOAA, NASA, Office of Naval Research, and BOEM have invested in US MBON since 2014, most recently announcing new five year projects in 2022.

import pyobis

pyobis.__version__

'1.3.1'

First off, let’s look through the available institutions at OBIS and find the US MBON one.

Hint: We know the name of the institution is United States Marine Biodiversity Observation Network.

import pandas as pd

pd.set_option("max_colwidth", 400)

url = "https://api.obis.org/v3/institute"

institutes = pd.read_json(url)

df_inst = pd.DataFrame.from_records(institutes["results"])

df_inst.dropna(inplace=True)

df_inst["id"] = df_inst["id"].astype(int)

institution = df_inst.loc[

df_inst["name"].str.contains(

"United States Marine Biodiversity Observation", na=False

)

]

institution

| id | name | country | records | |

|---|---|---|---|---|

| 61 | 23070 | United States Marine Biodiversity Observation Network | United States of America | 1620917 |

Well that looks like the institution we’re after!

Using the id we can check it out on the OBIS website: https://obis.org/institute/23070

Yes, that does look like what we want. Now let’s use that id to query OBIS for all associated datasets.

institution_id = institution["id"].tolist()[0]

institution_id

23070

Collect metadata about all of the datasets#

Here we use the institution_id collected from OBIS to query the API for metadata on the datasets associated with that id.

First, we build the API call, then we bring it into a Pandas DataFrame and create additional fields for use later.

import pyobis

query = pyobis.dataset.search(instituteid=institution_id)

df = pd.DataFrame(query.execute())

df_meta = pd.DataFrame.from_records(df["results"])

# create a column for the human readable short name of the datasets

df_meta["short_name"] = df_meta["url"].str.split("=", expand=True)[1]

# create another column to group datasets by, which removes the year from the short names

df_meta["short_name_group"] = df_meta["short_name"].replace("\d", "", regex=True)

df_meta.head(5)

<>:13: SyntaxWarning: invalid escape sequence '\d'

<>:13: SyntaxWarning: invalid escape sequence '\d'

/tmp/ipykernel_72684/2509542522.py:13: SyntaxWarning: invalid escape sequence '\d'

df_meta["short_name_group"] = df_meta["short_name"].replace("\d", "", regex=True)

| id | url | archive | published | created | updated | core | extensions | statistics | extent | ... | intellectualrights | feed | institutes | contacts | nodes | keywords | downloads | records | short_name | short_name_group | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | cfceb150-bbe2-4efb-8682-14cfc7167e7c | https://ipt-obis.gbif.us/resource?r=rcca_transects | https://ipt-obis.gbif.us/archive.do?r=rcca_transects | 2023-01-18T20:38:20.000Z | 2020-12-08T01:07:21.790Z | 2023-06-03T12:51:53.436Z | event | [measurementorfact, occurrence] | {'Event': 19375, 'absence': 487162, 'dropped': 0, 'Occurrence': 724871, 'DNADerivedData': 0, 'MeasurementOrFact': 255300} | POLYGON((-124.294724 32.694233000000004,-124.294724 42.045155,-117.264999 42.045155,-117.264999 32.694233000000004,-124.294724 32.694233000000004)) | ... | To the extent possible under law, the publisher has waived all rights to these data and has dedicated them to the Public Domain (CC0 1.0) | {'id': '8112d75b-7e78-4641-9e09-9a2cc7db7375', 'url': 'https://ipt-obis.gbif.us/rss.do'} | [{'name': 'U.S. Geological Survey HQ', 'oceanexpert_id': 12976, 'oceanexpert_parent_id': None}, {'name': 'United States Marine Biodiversity Observation Network', 'oceanexpert_id': 23070, 'oceanexpert_parent_id': None}, {'name': 'Central & Northern California Ocean Observing System', 'oceanexpert_id': 23204, 'oceanexpert_parent_id': None}, {'name': 'Reef Check Foundation', 'oceanexpert_id': 232... | [{'role': None, 'type': 'creator', 'givenname': 'Jan', 'surname': 'Friewald', 'organization': 'Reef Check Foundation', 'position': 'Executive Director', 'email': 'jfreiwald@reefcheck.org', 'url': None, 'organization_oceanexpert_id': 23205, 'type_display': 'Creator'}, {'role': None, 'type': 'contact', 'givenname': 'Jan', 'surname': 'Friewald', 'organization': 'Reef Check Foundation', 'position'... | [{'id': 'b7c47783-a020-4173-b390-7b57c4fa1426', 'name': 'OBIS USA'}] | [{'keyword': 'Samplingevent', 'thesaurus': 'GBIF Dataset Type Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_type_2015-07-10.xml'}] | [{'year': 2024, 'downloads': 3849, 'records': 520892938}, {'year': 2023, 'downloads': 3648, 'records': 588494102}, {'year': 2022, 'downloads': 4191, 'records': 426899064}, {'year': 2021, 'downloads': 13776, 'records': 497036713}, {'year': 2020, 'downloads': 747, 'records': 26199396}] | 237709 | rcca_transects | rcca_transects |

| 1 | d50e0443-4d84-4bd9-a5ad-4d417a7607e2 | https://ipt-obis.gbif.us/resource?r=2009floridakeysrvc | https://ipt-obis.gbif.us/archive.do?r=2009floridakeysrvc | 2023-02-21T17:28:10.000Z | None | 2023-06-06T16:14:55.499Z | event | [measurementorfact, occurrence] | {'Event': 2542, 'absence': 353964, 'dropped': 0, 'Occurrence': 451581, 'DNADerivedData': 0, 'MeasurementOrFact': 905704} | POLYGON((-81.9975333 24.4313,-81.9975333 25.7526,-80.08755 25.7526,-80.08755 24.4313,-81.9975333 24.4313)) | ... | To the extent possible under law, the publisher has waived all rights to these data and has dedicated them to the Public Domain (CC0 1.0) | {'id': '8112d75b-7e78-4641-9e09-9a2cc7db7375', 'url': 'https://ipt-obis.gbif.us/rss.do'} | [{'name': 'NOAA, Southeast Fisheries Science Center, National Marine Fisheries Service Southeast Miami Laboratory', 'oceanexpert_id': 7534, 'oceanexpert_parent_id': 11379}, {'name': 'Rosenstiel School of Marine & Atmospheric Science, University of Miami', 'oceanexpert_id': 8124, 'oceanexpert_parent_id': None}, {'name': 'U.S. Geological Survey HQ', 'oceanexpert_id': 12976, 'oceanexpert_parent_i... | [{'role': None, 'type': 'creator', 'givenname': 'Jerald', 'surname': 'Ault', 'organization': 'University of Miami, Rosenstiel School of Marine & Atmospheric Science', 'position': 'Program Lead', 'email': 'jault@rsmas.miami.edu', 'url': None, 'organization_oceanexpert_id': 8124, 'type_display': 'Creator'}, {'role': None, 'type': 'creator', 'givenname': 'Jim', 'surname': 'Bohnsack', 'organiz... | [{'id': 'b7c47783-a020-4173-b390-7b57c4fa1426', 'name': 'OBIS USA'}] | [{'keyword': 'Samplingevent', 'thesaurus': 'GBIF Dataset Type Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_type.xml'}, {'keyword': 'Samplingevent', 'thesaurus': 'GBIF Dataset Type Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_type_2015-07-10.xml'}] | [{'year': 2024, 'downloads': 11707, 'records': 216972587}, {'year': 2023, 'downloads': 7679, 'records': 242648438}, {'year': 2022, 'downloads': 7478, 'records': 175113790}, {'year': 2021, 'downloads': 17549, 'records': 204507663}, {'year': 2020, 'downloads': 3443, 'records': 68153160}, {'year': 2019, 'downloads': 293, 'records': 8867930}, {'year': 2018, 'downloads': 1, 'records': 97617}] | 97617 | 2009floridakeysrvc | floridakeysrvc |

| 2 | b4ac3d44-b4bf-49ef-a6e9-e2c02adff2fa | https://ipt-obis.gbif.us/resource?r=drytortugasreefvisualcensus2012 | https://ipt-obis.gbif.us/archive.do?r=drytortugasreefvisualcensus2012 | 2023-02-21T17:50:22.000Z | None | 2023-06-06T09:35:54.566Z | event | [measurementorfact, occurrence] | {'Event': 2280, 'absence': 297153, 'dropped': 0, 'Occurrence': 384195, 'DNADerivedData': 0, 'MeasurementOrFact': 770670} | POLYGON((-83.1035167 24.5519333,-83.1035167 24.7284333,-82.7729667 24.7284333,-82.7729667 24.5519333,-83.1035167 24.5519333)) | ... | To the extent possible under law, the publisher has waived all rights to these data and has dedicated them to the Public Domain (CC0 1.0) | {'id': '8112d75b-7e78-4641-9e09-9a2cc7db7375', 'url': 'https://ipt-obis.gbif.us/rss.do'} | [{'name': 'NOAA, Southeast Fisheries Science Center, National Marine Fisheries Service Southeast Miami Laboratory', 'oceanexpert_id': 7534, 'oceanexpert_parent_id': 11379}, {'name': 'Rosenstiel School of Marine & Atmospheric Science, University of Miami', 'oceanexpert_id': 8124, 'oceanexpert_parent_id': None}, {'name': 'Gulf of Mexico Coastal Ocean Observing System', 'oceanexpert_id': 18936, '... | [{'role': None, 'type': 'creator', 'givenname': 'Jerald', 'surname': 'Ault', 'organization': 'University of Miami, Rosenstiel School of Marine & Atmospheric Science', 'position': 'Project Lead', 'email': 'jault@rsmas.miami.edu', 'url': None, 'organization_oceanexpert_id': 8124, 'type_display': 'Creator'}, {'role': None, 'type': 'creator', 'givenname': 'Jim', 'surname': 'Bohnsack', 'organiz... | [{'id': 'b7c47783-a020-4173-b390-7b57c4fa1426', 'name': 'OBIS USA'}] | [{'keyword': 'Occurrence', 'thesaurus': 'GBIF Dataset Type Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_type.xml'}, {'keyword': 'Observation', 'thesaurus': 'GBIF Dataset Subtype Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_subtype.xml'}, {'keyword': 'Samplingevent', 'thesaurus': 'GBIF Dataset Type Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_type_2015-07-10.xml'}] | [{'year': 2024, 'downloads': 11800, 'records': 192712914}, {'year': 2023, 'downloads': 7461, 'records': 215815493}, {'year': 2022, 'downloads': 7273, 'records': 158822384}, {'year': 2021, 'downloads': 17801, 'records': 178906115}, {'year': 2020, 'downloads': 3353, 'records': 59225761}, {'year': 2019, 'downloads': 299, 'records': 7648000}, {'year': 2018, 'downloads': 1, 'records': 87042}] | 87042 | drytortugasreefvisualcensus2012 | drytortugasreefvisualcensus |

| 3 | 208bbf73-5dac-4d20-a017-5fc7d18a5b48 | https://ipt-obis.gbif.us/resource?r=floridakeysreefvisualcensus2011 | https://ipt-obis.gbif.us/archive.do?r=floridakeysreefvisualcensus2011 | 2023-02-21T18:05:15.000Z | None | 2023-06-05T04:39:24.917Z | event | [measurementorfact, occurrence] | {'Event': 2095, 'absence': 276737, 'dropped': 0, 'Occurrence': 353280, 'DNADerivedData': 0, 'MeasurementOrFact': 708655} | POLYGON((-81.988117 24.43305,-81.988117 25.71506,-80.092217 25.71506,-80.092217 24.43305,-81.988117 24.43305)) | ... | To the extent possible under law, the publisher has waived all rights to these data and has dedicated them to the Public Domain (CC0 1.0) | {'id': '8112d75b-7e78-4641-9e09-9a2cc7db7375', 'url': 'https://ipt-obis.gbif.us/rss.do'} | [{'name': 'NOAA, Southeast Fisheries Science Center, National Marine Fisheries Service Southeast Miami Laboratory', 'oceanexpert_id': 7534, 'oceanexpert_parent_id': 11379}, {'name': 'Rosenstiel School of Marine & Atmospheric Science, University of Miami', 'oceanexpert_id': 8124, 'oceanexpert_parent_id': None}, {'name': 'Gulf of Mexico Coastal Ocean Observing System', 'oceanexpert_id': 18936, '... | [{'role': None, 'type': 'creator', 'givenname': 'Jerald', 'surname': 'Ault', 'organization': 'University of Miami, Rosenstiel School of Marine & Atmospheric Science', 'position': 'Project Lead', 'email': 'jault@rsmas.miami.edu', 'url': None, 'organization_oceanexpert_id': 8124, 'type_display': 'Creator'}, {'role': None, 'type': 'creator', 'givenname': 'Jim', 'surname': 'Bohnsack', 'organiz... | [{'id': 'b7c47783-a020-4173-b390-7b57c4fa1426', 'name': 'OBIS USA'}] | [{'keyword': 'Occurrence', 'thesaurus': 'GBIF Dataset Type Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_type.xml'}, {'keyword': 'Observation', 'thesaurus': 'GBIF Dataset Subtype Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_subtype.xml'}, {'keyword': 'Samplingevent', 'thesaurus': 'GBIF Dataset Type Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_type_2015-07-10.xml'}] | [{'year': 2024, 'downloads': 11481, 'records': 169952503}, {'year': 2023, 'downloads': 7513, 'records': 190581549}, {'year': 2022, 'downloads': 7350, 'records': 139710500}, {'year': 2021, 'downloads': 17831, 'records': 160861289}, {'year': 2020, 'downloads': 3556, 'records': 53543342}, {'year': 2019, 'downloads': 322, 'records': 7049786}, {'year': 2018, 'downloads': 1, 'records': 76543}] | 76543 | floridakeysreefvisualcensus2011 | floridakeysreefvisualcensus |

| 4 | 33f39020-dfb7-4d45-bee3-9e3910792d9d | https://ipt-obis.gbif.us/resource?r=2012floridakeys | https://ipt-obis.gbif.us/archive.do?r=2012floridakeys | 2023-02-21T17:29:53.000Z | None | 2023-06-05T12:51:56.106Z | event | [measurementorfact, occurrence] | {'Event': 2015, 'absence': 290261, 'dropped': 0, 'Occurrence': 366514, 'DNADerivedData': 0, 'MeasurementOrFact': 735043} | POLYGON((-81.9519667 24.4371333,-81.9519667 25.75155,-80.0896667 25.75155,-80.0896667 24.4371333,-81.9519667 24.4371333)) | ... | To the extent possible under law, the publisher has waived all rights to these data and has dedicated them to the Public Domain (CC0 1.0) | {'id': '8112d75b-7e78-4641-9e09-9a2cc7db7375', 'url': 'https://ipt-obis.gbif.us/rss.do'} | [{'name': 'NOAA, Southeast Fisheries Science Center, National Marine Fisheries Service Southeast Miami Laboratory', 'oceanexpert_id': 7534, 'oceanexpert_parent_id': 11379}, {'name': 'Rosenstiel School of Marine & Atmospheric Science, University of Miami', 'oceanexpert_id': 8124, 'oceanexpert_parent_id': None}, {'name': 'U.S. Geological Survey HQ', 'oceanexpert_id': 12976, 'oceanexpert_parent_i... | [{'role': None, 'type': 'creator', 'givenname': 'Jerald', 'surname': 'Ault', 'organization': 'University of Miami, Rosenstiel School of Marine & Atmospheric Science', 'position': 'Project Lead', 'email': 'jault@rsmas.miami.edu', 'url': None, 'organization_oceanexpert_id': 8124, 'type_display': 'Creator'}, {'role': None, 'type': 'creator', 'givenname': 'Jim', 'surname': 'Bohnsack', 'organiz... | [{'id': 'b7c47783-a020-4173-b390-7b57c4fa1426', 'name': 'OBIS USA'}] | [{'keyword': 'Samplingevent', 'thesaurus': 'GBIF Dataset Type Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_type.xml'}, {'keyword': 'Samplingevent', 'thesaurus': 'GBIF Dataset Type Vocabulary: http://rs.gbif.org/vocabulary/gbif/dataset_type_2015-07-10.xml'}] | [{'year': 2024, 'downloads': 11368, 'records': 169299730}, {'year': 2023, 'downloads': 7385, 'records': 189896655}, {'year': 2022, 'downloads': 7230, 'records': 138680258}, {'year': 2021, 'downloads': 17532, 'records': 160135207}, {'year': 2020, 'downloads': 3446, 'records': 53299681}, {'year': 2019, 'downloads': 341, 'records': 6924827}, {'year': 2018, 'downloads': 1, 'records': 76253}] | 76253 | 2012floridakeys | floridakeys |

5 rows × 24 columns

df_meta[["short_name", "short_name_group"]].describe()

| short_name | short_name_group | |

|---|---|---|

| count | 41 | 41 |

| unique | 41 | 21 |

| top | rcca_transects | floridakeysreefvisualcensus |

| freq | 1 | 15 |

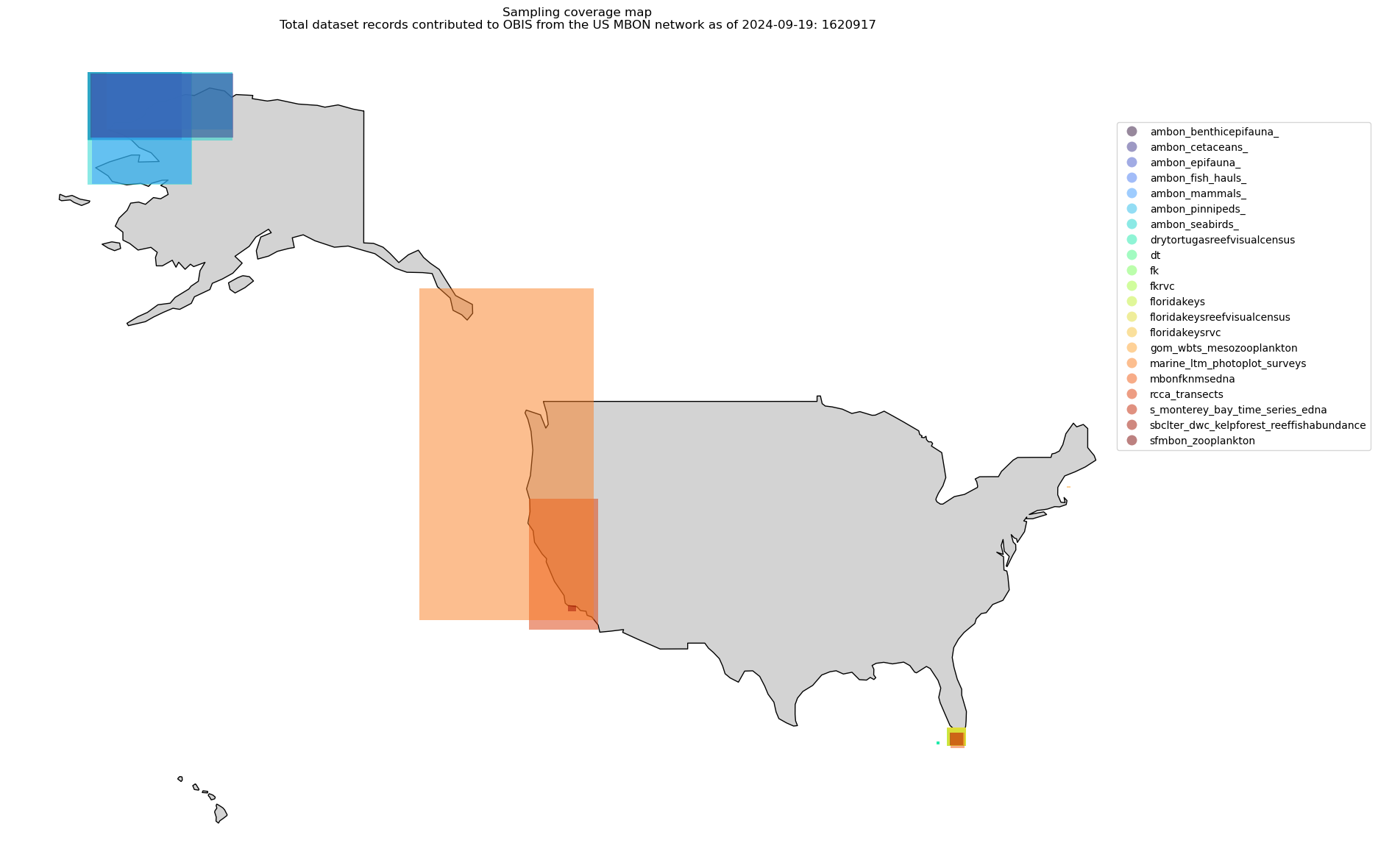

Read to geopandas and plot the bounding boxes#

Next we want to make a simple map of the coverage each dataset has. We can use the extent field directly in geopandas to make a simple map.

from datetime import datetime

import cartopy.io.shapereader as shpreader

import geopandas

import matplotlib.pyplot as plt

gdf = geopandas.GeoDataFrame(

df_meta, geometry=geopandas.GeoSeries.from_wkt(df_meta["extent"])

)

gdf.set_crs("epsg:4326", inplace=True)

fig, ax = plt.subplots(

figsize=(20, 15),

)

gdf.plot(

ax=ax,

zorder=10,

alpha=0.5,

column="short_name_group",

legend=True,

cmap="turbo",

)

leg = ax.get_legend()

leg.set_bbox_to_anchor((0.0, 0.0, 1.2, 0.9))

shpfilename = shpreader.natural_earth(

resolution="110m",

category="cultural",

name="admin_0_countries",

)

world = geopandas.read_file(shpfilename)

world = world.to_crs(gdf.crs)

# bound map to US.

world.loc[world["ISO_A3"] == "USA"].plot(

ax=ax, color="lightgrey", edgecolor="black", zorder=1

)

today = datetime.now().strftime("%Y-%m-%d")

ax.set_title(

f"Sampling coverage map\nTotal dataset records contributed to OBIS from the US MBON network as of {today}: {gdf.records.sum() }"

)

ax.set_axis_off();

gdf.explore(column="short_name")

Save as a geojson#

Since we have the data, why don’t we write this out to a geoJson file to interact with on GitHub.

fname = f"US_MBON_bounding_boxes_{today.replace('-','')}.geojson"

# can"t export fields with lists or dictionaries - breaks the format.

gdf[["id", "title", "url", "geometry"]].to_file(fname, driver="GeoJSON")

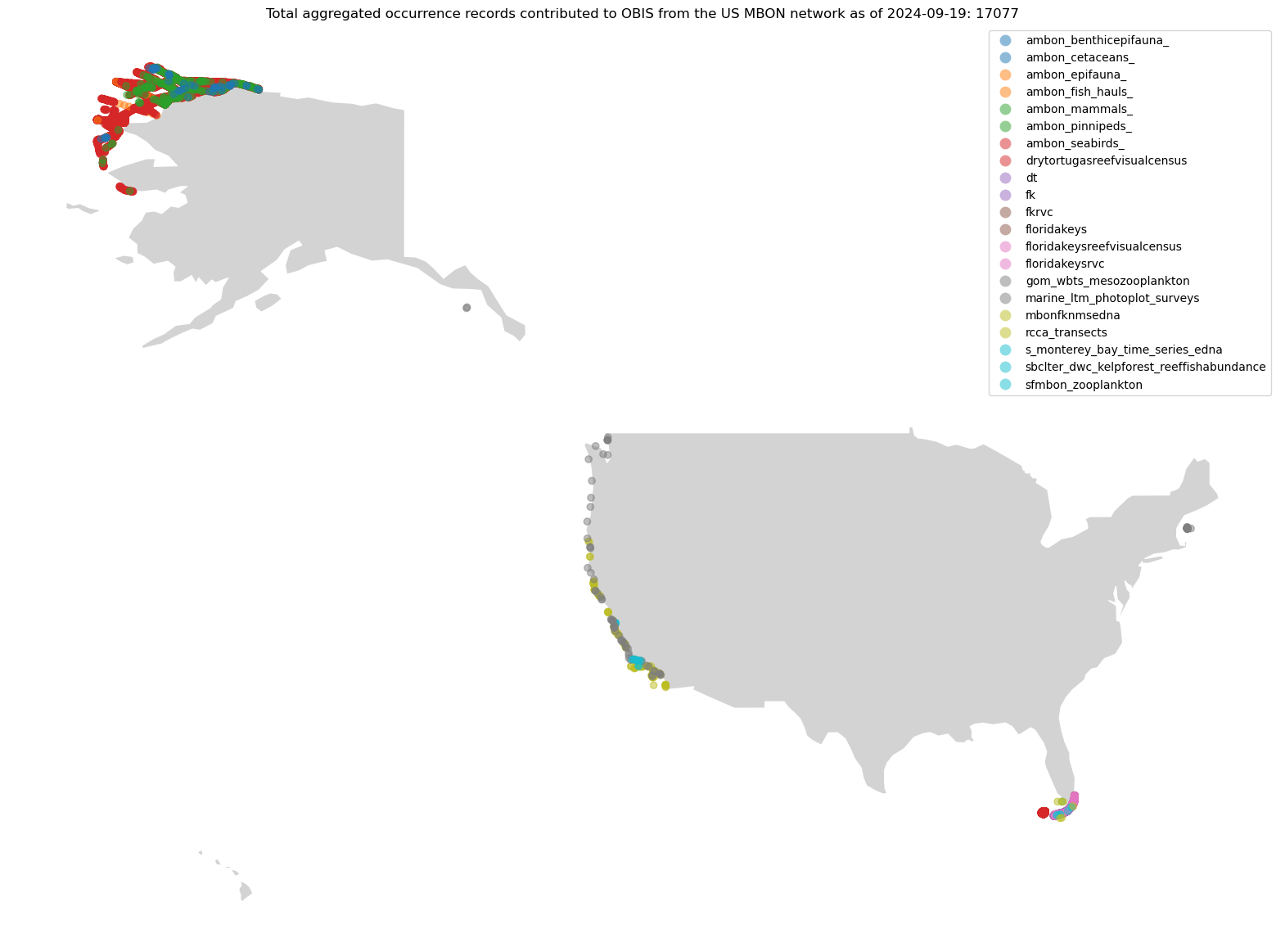

Query the OBIS aggregated points#

Now that we know the general area for where our observations are made, let’s dig a little deeper into each dataset.

We can use pyobis.occurrences.getpoints() to return all of the points for each dataset, aggregated to Geohash precision 8.

If we’d like a little more metadata about each of the aggregated points, we can query by dataset to collect the aggregated points and some useful metadata.

combined = pd.DataFrame()

query = pyobis.dataset.search(instituteid=institution_id)

df = pd.DataFrame(query.execute())

df_meta = pd.DataFrame.from_records(df["results"])

for datasetid in df_meta["id"]:

dset = pyobis.occurrences.getpoints(datasetid=datasetid).execute()

meta = pyobis.dataset.get(id=datasetid).execute()["results"][0]

short_name = meta["url"].split("=")[-1]

df = pd.DataFrame(dset)

df["dataset_id"] = datasetid

df["short_name"] = meta["url"].split("=")[-1]

df["short_name_group"] = df["short_name"].replace("\d", "", regex=True)

df["url"] = meta["url"]

df["metadata"] = str(meta)

df[["decimalLongitude", "decimalLatitude"]] = pd.DataFrame(

df["coordinates"].tolist()

)

combined = pd.concat([combined, df], ignore_index=True)

gdf_ghsh = geopandas.GeoDataFrame(

combined[

["dataset_id", "url", "short_name_group", "decimalLatitude", "decimalLongitude"]

],

geometry=geopandas.points_from_xy(

combined.decimalLongitude, combined.decimalLatitude

),

crs="epsg:4326",

)

fig, ax = plt.subplots(figsize=(20, 15))

gdf_ghsh.plot(

ax=ax,

zorder=10,

alpha=0.5,

column="short_name_group",

legend=True,

)

world.loc[world["ISO_A3"] == "USA"].plot(

ax=ax, color="lightgrey", edgecolor="white", zorder=1

)

now = datetime.now()

today = now.strftime("%Y-%m-%d")

ax.set_title(

f"Total aggregated occurrence records contributed to OBIS from the US MBON network as of {today}: {gdf_ghsh.shape[0]}"

)

ax.set_axis_off();

<>:18: SyntaxWarning: invalid escape sequence '\d'

<>:18: SyntaxWarning: invalid escape sequence '\d'

/tmp/ipykernel_72684/2245479231.py:18: SyntaxWarning: invalid escape sequence '\d'

df["short_name_group"] = df["short_name"].replace("\d", "", regex=True)

Let’s explore those points a little more with geopandas.GeoDataFrame.explore().#

This allows you to create an interactive map based on folium/leaflet.jsInteractive map based on GeoPandas and folium/leaflet.js

gdf_ghsh.explore(column="short_name_group")

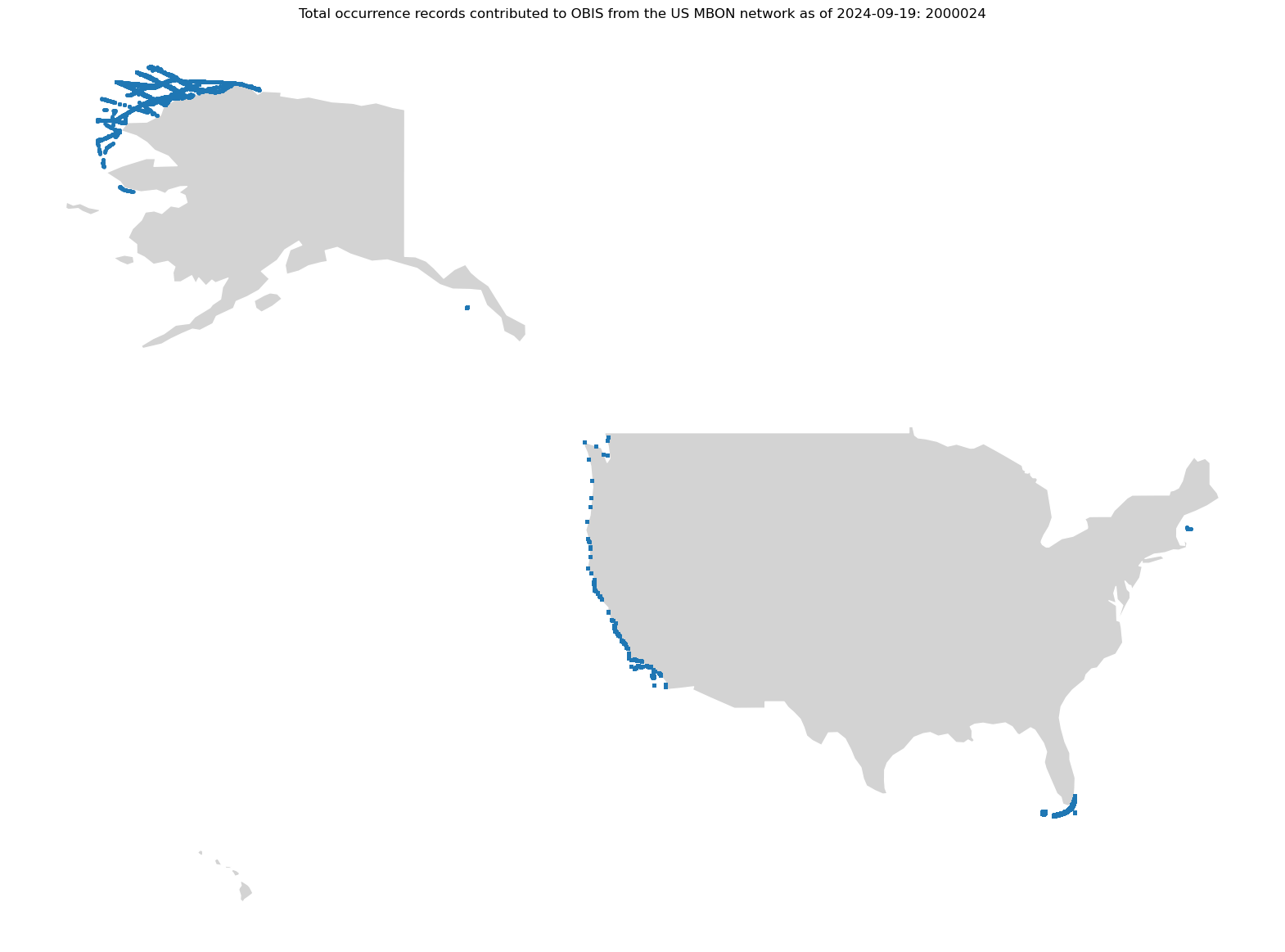

Use pyobis to return all occurrences.#

If you are really interested in all of the records, the code below exemplifies how one might use pyobis to iterate through each dataset and collect only the coordinates to create a map.

Note: This takes a while to run!

First, let’s get all the occurrences as one huge DataFrame.

from pathlib import Path

fname = "combined_high_res.zip"

if Path(fname).exists():

print(

f"Loading cached {fname}. Remove this file if you want to fetch all the points again."

)

combined_high_res = pd.read_csv(fname, compression="zip")

else:

combined_high_res = pd.DataFrame()

query = pyobis.dataset.search(instituteid=institution_id)

df = pd.DataFrame(query.execute())

df_meta = pd.DataFrame.from_records(df["results"])

fields = ["decimalLatitude", "decimalLongitude", "id"]

for datasetid in df_meta["id"]:

query = pyobis.occurrences.search(datasetid=datasetid, fields=fields)

dset = query.execute()

df = pd.DataFrame(dset)

combined_high_res = pd.concat([combined_high_res, df], ignore_index=True)

combined_high_res[["decimalLongitude", "decimalLatitude"]].to_csv(

fname, index=False, compression="zip"

)

Loading cached combined_high_res.zip. Remove this file if you want to fetch all the points again.

Next, let’s convert that DataFrame to a GeoDataFrame and plot the points on a map.

gdf_high_res = geopandas.GeoDataFrame(

combined_high_res[["decimalLatitude", "decimalLongitude"]],

geometry=geopandas.points_from_xy(

combined_high_res.decimalLongitude, combined_high_res.decimalLatitude

),

crs="epsg:4326",

)

fig, ax = plt.subplots(figsize=(20, 15))

gdf_high_res.plot.scatter(

x="decimalLongitude",

y="decimalLatitude",

ax=ax,

s=5,

zorder=10,

rasterized=True,

)

world.loc[world["ISO_A3"] == "USA"].plot(

ax=ax, color="lightgrey", edgecolor="white", zorder=1

)

now = datetime.now()

today = now.strftime("%Y-%m-%d")

ax.set_title(

f"Total occurrence records contributed to OBIS from the US MBON network as of {today}: {gdf_high_res.shape[0]}"

)

ax.set_axis_off();

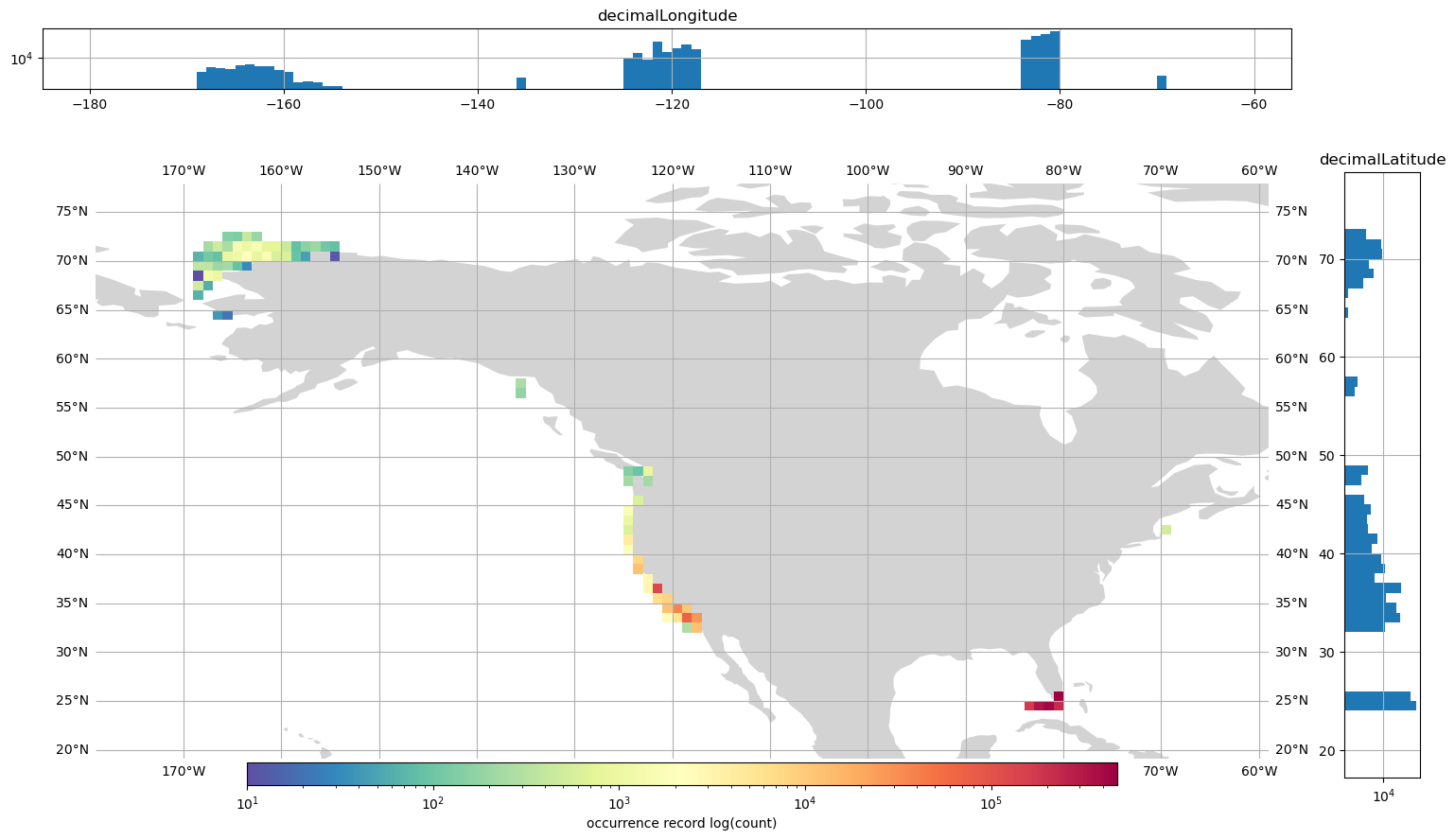

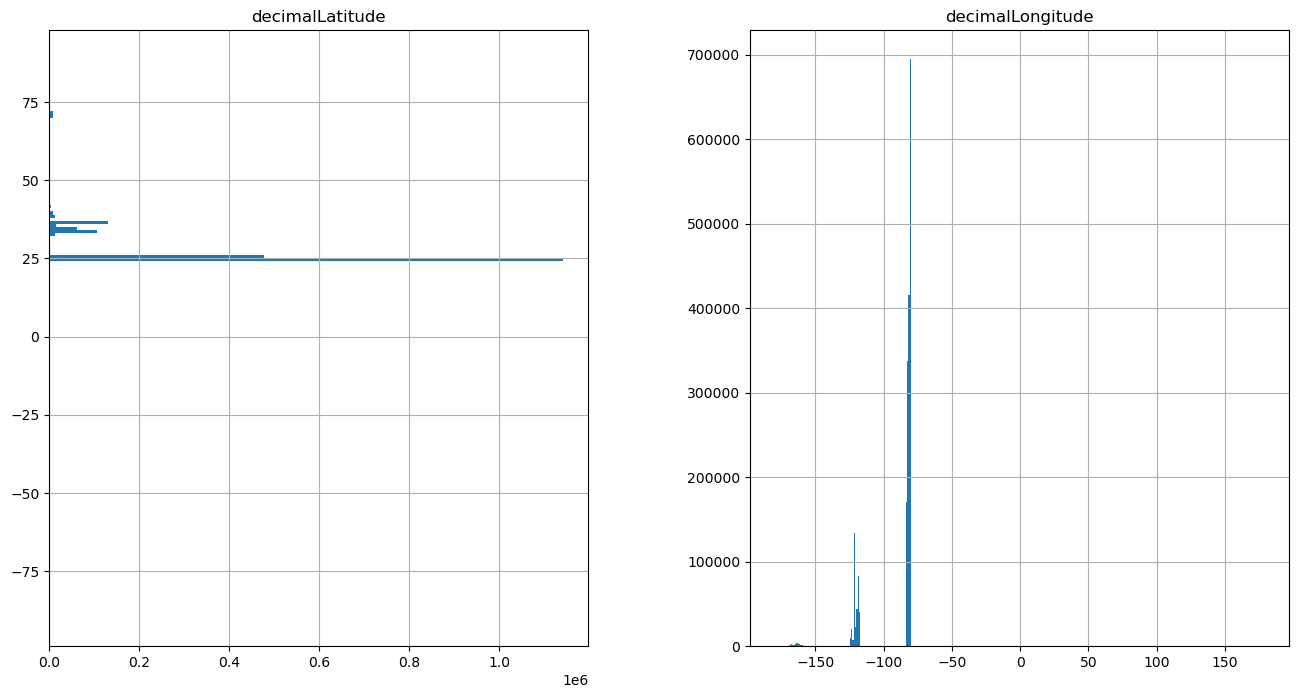

Let’s make some histograms of occurrence record distributions across latitude and longitude#

First, we need to create the bins by which we will calculate the number of occurrences. For this example we will use 1 degree bins.

bins_lat = [i for i in range(-90, 90, 1)]

bins_lon = [i for i in range(-180, 180, 1)]

fig, ax = plt.subplots(nrows=1, ncols=2, figsize=(16, 8))

gdf_high_res.hist(

column="decimalLatitude", bins=bins_lat, orientation="horizontal", ax=ax[0]

)

gdf_high_res.hist(

column="decimalLongitude", bins=bins_lon, orientation="vertical", ax=ax[1]

)

array([<Axes: title={'center': 'decimalLongitude'}>], dtype=object)

Create a grid of observations#

1 degree x 1 degree

import numpy as np

import shapely

# total area for the grid

xmin, ymin, xmax, ymax = [-180.0, -90.0, 180.0, 90]

# how many cells across and down

n_cells = 360

# calculate the size of the cells

cell_size = (xmax - xmin) / n_cells

# create an evenly distributed GeoDataFrame for the grid described above

grid_cells = []

for x0 in np.arange(xmin, xmax + cell_size, cell_size):

for y0 in np.arange(ymin, ymax + cell_size, cell_size):

# bounds

x1 = x0 - cell_size

y1 = y0 + cell_size

grid_cells.append(shapely.geometry.box(x0, y0, x1, y1))

cell = geopandas.GeoDataFrame(grid_cells, columns=["geometry"], crs="EPSG:4326")

# spatial join the OBIS data with the one-degree cells for where OBIS data are within cells.

merged = geopandas.sjoin(gdf_high_res, cell, how="left", predicate="within")

# make a simple count variable that we can sum for each cell

merged["n_occur"] = 1

# Compute stats per grid cell -- aggregate occurences to grid cells with dissolve

dissolve = merged.dissolve(by="index_right", aggfunc="count")

# put this into cell

cell.loc[dissolve.index, "n_occur"] = dissolve.n_occur.values

# calculate occurrence density in km^2 for each cell.

cell["area (km2)"] = cell.to_crs(epsg=3763).area / (1000**2)

cell.plot(column="n_occur", legend=True)

/home/filipe/micromamba/envs/IOOS/lib/python3.12/site-packages/shapely/measurement.py:44: RuntimeWarning: invalid value encountered in area

return lib.area(geometry, **kwargs)

<Axes: >

Next, let’s check out the distribution of the values for n_occur so we can make an appropriatly legible map of the distribution.

cell.describe()

/home/filipe/micromamba/envs/IOOS/lib/python3.12/site-packages/pandas/core/nanops.py:1016: RuntimeWarning: invalid value encountered in subtract

sqr = _ensure_numeric((avg - values) ** 2)

| n_occur | area (km2) | |

|---|---|---|

| count | 80.000000 | 6.449600e+04 |

| mean | 25000.212500 | inf |

| std | 84580.460311 | NaN |

| min | 10.000000 | 1.088584e+02 |

| 25% | 156.500000 | 5.065842e+03 |

| 50% | 594.000000 | 1.071955e+04 |

| 75% | 2487.500000 | 1.742916e+04 |

| max | 477661.000000 | inf |

Since the distribution of n_occur covers a wide range, we will present the concentration and histogram figures as log normal distributions.

So, let’s combine the latitude and longitude histograms with the map to recreate this figure https://bbest.github.io/obis-lat-time-fig.

import geoplot

import geoplot.crs as gcrs

import matplotlib as mpl

import matplotlib.pyplot as plt

import matplotlib.ticker as mticker

norm = mpl.colors.LogNorm(vmin=cell["n_occur"].min(), vmax=cell["n_occur"].max())

projection = gcrs.PlateCarree(central_longitude=-105)

world = world.dissolve()

fig, ax = plt.subplots(

figsize=(16, 8), facecolor="white", subplot_kw={"projection": projection}

)

geoplot.polyplot(

world,

facecolor="lightgray",

edgecolor=None,

projection=projection,

ax=ax,

)

cax = fig.add_axes([0.225, 0.08, 0.575, 0.03])

cell = cell[~cell["n_occur"].isna()]

geoplot.choropleth(

cell,

hue="n_occur",

edgecolor=None,

projection=projection,

ax=ax,

cmap="Spectral_r",

legend=True,

legend_kwargs={

"orientation": "horizontal",

"cax": cax,

"label": "occurrence record log(count)",

},

norm=norm,

)

gl = ax.gridlines(draw_labels=True)

gl.ylocator = mticker.FixedLocator(range(0, 90, 5))

gl.xlocator = mticker.FixedLocator(range(-170, -40, 10))

# Add histogram for distribution

ax2 = fig.add_axes([0.95, 0.090, 0.05, 0.8])

bins_lat = [i for i in range(20, 77, 1)]

gdf_high_res.hist(

column="decimalLatitude", bins=bins_lat, orientation="horizontal", ax=ax2, log=True

)

ax3 = fig.add_axes([0.09, 1, 0.825, 0.08])

bins_lon = [i for i in range(-179, -61, 1)]

gdf_high_res.hist(

column="decimalLongitude", bins=bins_lon, orientation="vertical", ax=ax3, log=True

)

array([<Axes: title={'center': 'decimalLongitude'}>], dtype=object)